Django

Adelo Vieira


Revision as of 15:27, 7 May 2023

https://www.djangoproject.com/

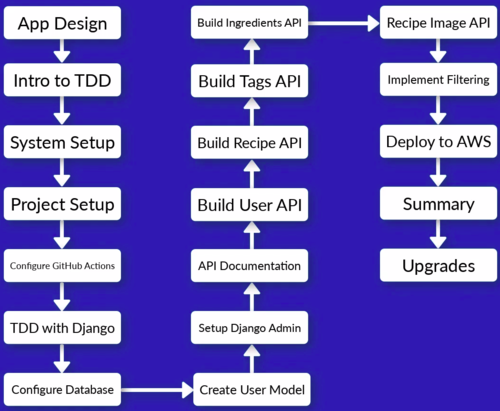

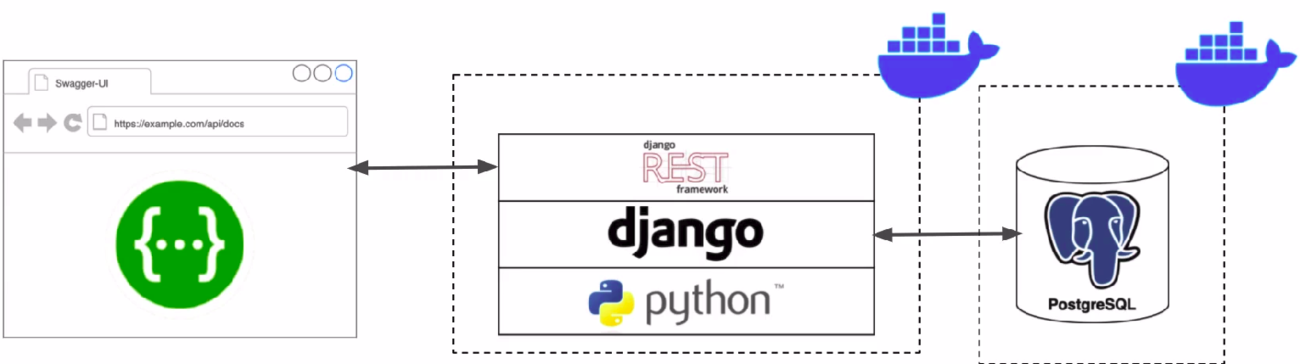

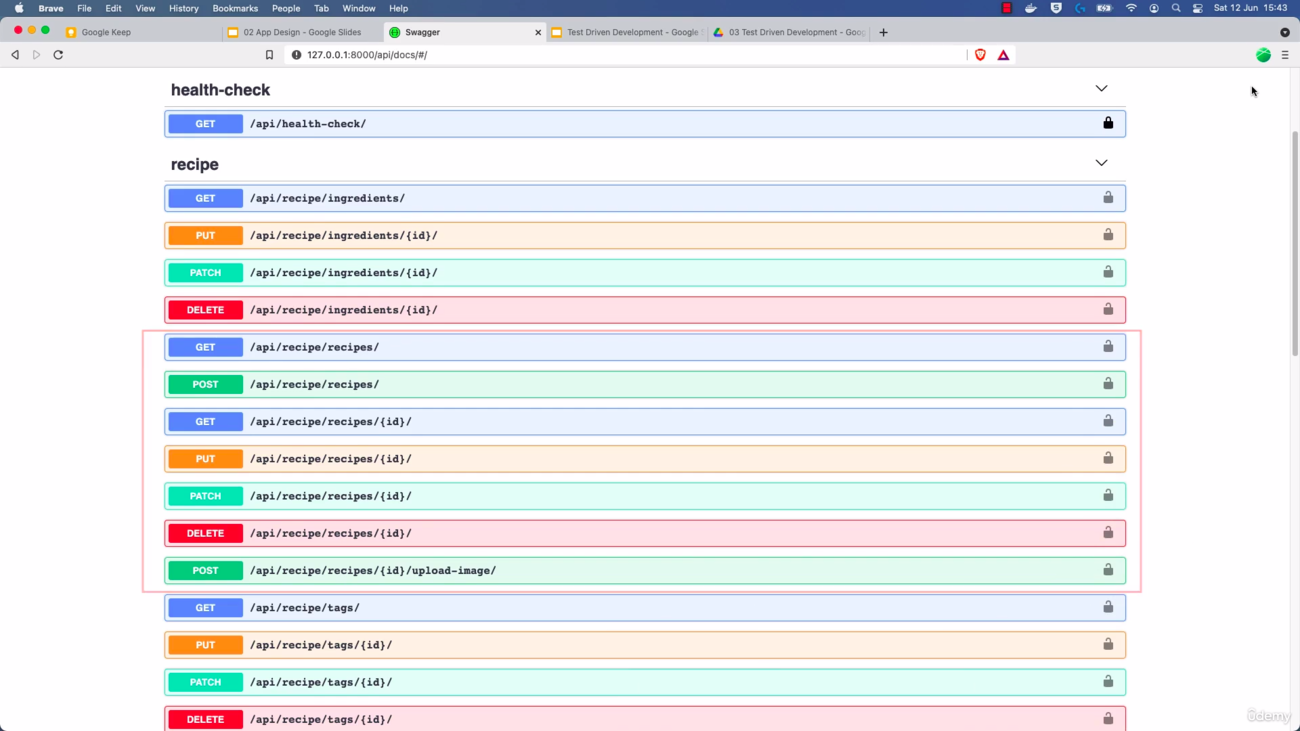

Udemy course: Build a Backend REST API with Python Django - Advanced

In this course we build a Recipe REST API

https://www.udemy.com/course/django-python-advanced/

Technologies used in this course:

API features:

Structure of the project:


Docker

Some benefits of using Docker: see Docker#Why use Docker

Drawbacks of using Docker in this project (not sure if these limitations still apply):

- VS Code is unable to access the interpreter.

- It is more difficult to use integrated features, such as the interactive debugger and the linting tools.

How Docker will be used in this project:

- Create a Dockerfile: this is the file that contains all the OS-level dependencies that our project needs. It is just a list of steps that Docker uses to create an image for our project:

- First we choose a base image, which is the Python base image provided for free on Docker Hub.

- Install dependencies: OS-level dependencies.

- Set up users: the Linux users needed to run the application.

- Create a Docker Compose configuration, docker-compose.yml: it tells Docker how to run the images that are created from our Dockerfile configuration.

- We need to define our "services":

- Name (we are going to use the name app)

- Port mappings

- Then we can run all commands via Docker Compose. For example:

docker-compose run --rm app sh -c "python manage.py collectstatic"

- docker-compose: runs a Docker Compose command.

- run: starts a specific container defined in the config.

- --rm: optional. It tells Docker Compose to remove the container once it finishes running.

- app: the name of the app/service we defined.

- sh -c: passes in a shell command.
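To see how the shell splits that command into separate arguments, and why the quotes around the sh -c part matter, here is a small Python sketch using the standard shlex module, which follows POSIX shell quoting rules. It only parses the string; it does not run Docker.

```python
import shlex

# The command from the notes, exactly as typed in the terminal.
command = 'docker-compose run --rm app sh -c "python manage.py collectstatic"'

# shlex.split follows POSIX shell quoting rules, so the double-quoted part
# stays together as ONE argument, which is what `sh -c` expects.
argv = shlex.split(command)

for position, token in enumerate(argv):
    print(position, token)
```

Everything before sh -c is handled by Docker Compose; the last argument is the single string that sh executes inside the container.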

Docker Hub

Docker Hub is a cloud-based registry service that allows developers to store, share, and manage Docker images. It is a central repository of Docker images that can be accessed from anywhere in the world, making it easy to distribute and deploy containerized applications.

Using Docker Hub, you can upload your Docker images to a central repository, making it easy to share them with other developers or deploy them to production environments. Docker Hub also provides a search function that allows you to search for images created by other developers, which can be a useful starting point for building your own Docker images.

Docker Hub supports both automated and manual image builds. With automated builds, you can connect your GitHub (using Docker on GitHub Actions) or Bitbucket repository to Docker Hub and configure it to automatically build and push Docker images whenever you push changes to your code. This can help streamline your CI/CD pipeline and ensure that your Docker images are always up-to-date.

Docker Hub has introduced rate limits:

- 100 pulls per 6 hours for unauthenticated users (shared by everyone pulling anonymously from the same IP address, e.g. a shared CI runner)

- 200 pulls per 6 hours for authenticated users with a free account (the limit applies only to your own user)

- So we have to authenticate with Docker Hub: create an account, set up credentials, and log in before running the job.

Docker on GitHub Actions

Docker on GitHub Actions is a feature that allows developers to use Docker containers for building and testing their applications in a continuous integration and delivery (CI/CD) pipeline on GitHub.

With Docker on GitHub Actions, you can define your build and test environments using Dockerfiles and Docker Compose files, and run them in a containerized environment on GitHub's virtual machines. This provides a consistent and reproducible environment for building and testing your applications, regardless of the host operating system or infrastructure.

Docker on GitHub Actions also provides a number of pre-built Docker images and actions that you can use to easily set up your CI/CD pipeline. For example, you can use the "docker/build-push-action" action to build and push Docker images to a container registry, or run docker-compose commands in a workflow step to run your application in a multi-container environment.

Using Docker on GitHub Actions can help simplify your CI/CD pipeline, improve build times, and reduce the risk of deployment failures due to environmental differences between development and production environments.

Common uses for GitHub Actions are:

- Deployment

- Code linting (covered in this course)

- Running unit tests (covered in this course)

To configure GitHub Actions, we start by setting a trigger. The different triggers are documented on the GitHub website.

The trigger that we are going to use is the «Push to GitHub» trigger:

- Whenever we push code to GitHub, it automatically runs some actions:

- Run unit tests

- After the job runs, there is an output/result: success or failure.

GitHub Actions is charged per minute. Free accounts get 2,000 free minutes per month.

Unit Tests and Test-driven development (TDD)

See explanation at:

- https://www.udemy.com/course/django-python-advanced/learn/lecture/32238668#notes

- https://www.udemy.com/course/django-python-advanced/learn/lecture/32238780#learning-tools

Unit tests: code which tests code. Unit tests are usually written this way:

- You set up some conditions, such as the inputs to a function.

- Then you run a piece of code.

- You check the outputs of that code using "assertions".
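A minimal sketch of those three steps with Python's built-in unittest library, the same library the Django test framework is built on. The calculate_total function is hypothetical, made up only to have something to test.

```python
import unittest


def calculate_total(prices, tax_rate):
    """Hypothetical function under test: sum the prices and apply a tax rate."""
    subtotal = sum(prices)
    return round(subtotal * (1 + tax_rate), 2)


class CalculateTotalTests(unittest.TestCase):
    def test_total_includes_tax(self):
        # 1. Set up the conditions (the inputs).
        prices = [10.0, 5.0]
        tax_rate = 0.2

        # 2. Run the piece of code under test.
        result = calculate_total(prices, tax_rate)

        # 3. Check the output with an assertion.
        self.assertEqual(result, 18.0)

    def test_empty_list_is_zero(self):
        self.assertEqual(calculate_total([], 0.2), 0.0)


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests finish.
    unittest.main(argv=["unit_test_example"], exit=False)
```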

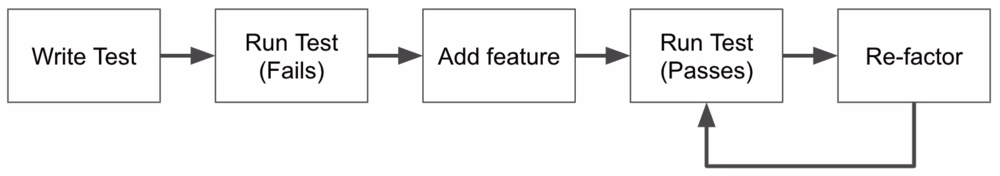

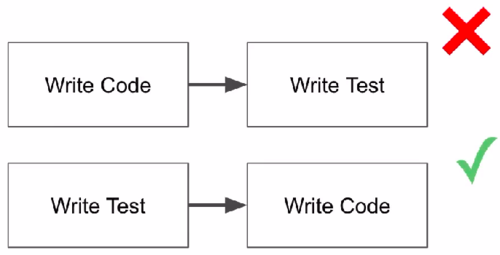

Test-driven development (TDD)
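TDD reverses the usual order: first write a test that fails, then write just enough code to make it pass, then refactor while re-running the test. A minimal sketch of one red/green cycle with unittest; slugify_title is a hypothetical helper invented for this example.

```python
import unittest


# Step 1 (red): write the test first. Before slugify_title exists,
# running these tests fails with a NameError.
class SlugifyTitleTests(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify_title("Chocolate Cake"), "chocolate-cake")

    def test_strips_surrounding_whitespace(self):
        self.assertEqual(slugify_title("  Pasta  "), "pasta")


# Step 2 (green): write just enough code to make the tests pass.
def slugify_title(title):
    """Hypothetical helper: turn a recipe title into a URL-friendly slug."""
    return "-".join(title.lower().split())


# Step 3 (refactor): improve the code, re-running the tests after each
# change to confirm they still pass.
if __name__ == "__main__":
    unittest.main(argv=["tdd_example"], exit=False)
```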

Setting the development environment

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238710#overview

- Go to https://github.com, create a repository for the project, and clone it to your local machine. See GitHub for help:

https://github.com/adeloaleman/django-rest-api-recipe-app

git clone git@github.com:adeloaleman/django-rest-api-recipe-app.git

- Then we create the app directory inside our project directory

mkdir app

- Go to Docker Hub (https://hub.docker.com) and log in to your account. Then go to Account settings > Security > New Access Token:

- Access token description: it's good practice to use the name of your GitHub project repository: django-rest-api-recipe-app

- Create and copy the access token.

- This token will be used by GitHub to access your Docker Hub account when building the Docker container.

- Go to your project's GitHub repository (https://github.com/adeloaleman/django-rest-api-recipe-app) > Settings > Secrets and variables > Actions:

New repository secret: first we add the user:

- Name: DOCKERHUB_USER

- Secret: this must be your Docker Hub user. In my case (I don't remember why it is my C.I.): 16407742

- Then we click New repository secret again to add the token:

- Name: DOCKERHUB_TOKEN

- Secret: this must be the Docker Hub access token we created at https://hub.docker.com: ***************

- This way, GitHub (through Docker on GitHub Actions) is able to authenticate with Docker Hub when building the Docker container.

- Go to your local project's directory and create requirements.txt:

```text
Django>=3.2.4,<3.3
djangorestframework>=3.12.4,<3.13
```

This tells pip that we want to install at least version 3.2.4 (the latest version at the time of the course) but less than 3.3. This way we make sure that we get the latest 3.2.x version. However, if 3.3 is released, we want to stay on 3.2.x, because this version change could introduce significant changes that may cause our code to fail.
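To make the range explicit, here is a simplified Python sketch of which versions ">=3.2.4,<3.3" accepts. It only handles plain numeric versions such as 3.2.19; pip itself follows the full PEP 440 rules (pre-releases, post-releases, etc.), so treat this as an illustration, not a replacement for pip's resolver.

```python
def parse_version(text):
    """Turn a plain numeric version like '3.2.4' into a comparable tuple.

    Trailing zeros are dropped so that '3.3' and '3.3.0' compare as equal.
    Simplified: pre-release/post-release tags (e.g. '3.3a1') are not supported.
    """
    parts = [int(part) for part in text.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def satisfies(version, minimum, upper_bound):
    """True if minimum <= version < upper_bound, i.e. '>=minimum,<upper_bound'."""
    return parse_version(minimum) <= parse_version(version) < parse_version(upper_bound)


# Django>=3.2.4,<3.3
for candidate in ["3.2.3", "3.2.4", "3.2.19", "3.3", "3.3.0", "4.0"]:
    print(candidate, satisfies(candidate, "3.2.4", "3.3"))
```

Only 3.2.4 and 3.2.19 print True: every later 3.2.x patch release is accepted, while 3.3 and above are rejected.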

- Now we are going to add linting:

Linting means running a tool that checks our code formatting. It highlights errors, typos, formatting issues, etc. We are going to handle linting like this:

Install the flake8 package. To do so, create the file requirements.dev.txt.

- The reason why we are creating a new requirements file is that we are going to add a custom build argument (DEV) to our Docker Compose configuration, so we only install these development requirements when we build an image for our local development server. We don't need the flake8 package when we deploy our application; we only need linting for development.

- It is good practice to separate development dependencies from the actual project dependencies so you don't introduce unnecessary packages into the image that you deploy to your deployment server.

requirements.dev.txt:

```text
flake8>=3.9.2,<3.10
```

Now we need to add the flake8 configuration file, .flake8, which must be placed inside our app directory. We're going to use it to manage exclusions: we only want to lint the files we write ourselves.

app/.flake8:

```ini
[flake8]
exclude = migrations, __pycache__, manage.py, settings.py
```
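.flake8 is an INI-style file. As a quick sanity check of its syntax, the sketch below parses the same content with Python's standard configparser module and lists what ends up in exclude. It only illustrates the file format; it is not how flake8 is run.

```python
import configparser

# Same content as app/.flake8.
FLAKE8_CONFIG = """
[flake8]
exclude = migrations, __pycache__, manage.py, settings.py
"""

parser = configparser.ConfigParser()
parser.read_string(FLAKE8_CONFIG)

# exclude is a comma-separated list; strip the spaces around each entry.
excluded = [item.strip() for item in parser["flake8"]["exclude"].split(",")]
print(excluded)
```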

- Go to your local project's directory and create the Dockerfile:

```dockerfile
FROM python:3.9-alpine3.13
LABEL maintainer="adeloaleman"

# Send Python output straight to the terminal (no buffering)
ENV PYTHONUNBUFFERED=1

# Copy the requirements files into the image. Development requirements are
# kept separate from deployment requirements (see requirements.dev.txt)
COPY ./requirements.txt /tmp/requirements.txt
COPY ./requirements.dev.txt /tmp/requirements.dev.txt
COPY ./app /app
# Default directory where our commands run inside the container
WORKDIR /app
# Port we are going to access in our container
EXPOSE 8000

ARG DEV=false
# 1. Create a virtual env (some people say it's not needed inside a container)
# 2. Upgrade pip and install our requirements
# 3. Install development dependencies only if DEV=true, which is the case
#    when the image is built through our Docker Compose configuration
# 4. Remove /tmp because it's not needed anymore
# 5. Add a user, because it's good practice not to run as the root user
RUN python -m venv /py && \
    /py/bin/pip install --upgrade pip && \
    /py/bin/pip install -r /tmp/requirements.txt && \
    if [ $DEV = "true" ]; \
        then /py/bin/pip install -r /tmp/requirements.dev.txt ; \
    fi && \
    rm -rf /tmp && \
    adduser \
        --disabled-password \
        --no-create-home \
        django-user

# Avoid typing /py/bin/ every time we run a command from the virtual env
ENV PATH="/py/bin:$PATH"

# The commands above ran as root; from here on, commands run as django-user
USER django-user
```

python:3.9-alpine3.13: this is the base image that we're going to use. It is pulled from Docker Hub. python is the name of the image and 3.9-alpine3.13 is the tag. Alpine is a lightweight Linux distribution. It's ideal for building Docker containers because it doesn't include unnecessary dependencies, which makes it very light and efficient. You can find all the images/tags at https://hub.docker.com

ARG DEV=false: this specifies that, by default, the image is not built for development. It is overridden in our docker-compose.yml by "- DEV=true". So when we build the image through Docker Compose, it is set to DEV=true, but by default (when the image is built without Docker Compose) it is DEV=false.

.dockerignore: we want to exclude any files that Docker doesn't need to be concerned with:

```text
# Git
.git
.gitignore

# Docker
.docker

# Python
# We exclude __pycache__ because it could cause issues: the __pycache__
# created on our local machine may be specific to our local OS and not
# to the container OS
app/__pycache__/
app/*/__pycache__/
app/*/*/__pycache__/
app/*/*/*/__pycache__/
.env/
.venv/
venv/
```

- At this point we can test building our image. For this we run:

docker build .

docker-compose.yml:

```yaml
version: "3.9"  # Version of the Docker Compose syntax, in case Docker Compose releases a new syntax version

services:  # Docker Compose normally consists of one or more services needed by our application
  app:  # Name of the service that runs our Dockerfile
    build:
      context: .  # Build from the current directory
      args:
        - DEV=true  # Overrides DEV=false from the Dockerfile, so the development image differs from the deployment one
    ports:  # Maps port 8000 on our local machine to port 8000 in our Docker container
      - "8000:8000"
    volumes:
      - ./app:/app
    command: >
      sh -c "python manage.py runserver 0.0.0.0:8000"
```

- ./app:/app: this maps the app directory on our local machine to the app directory of our Docker container. We do this because we want the changes made in our local directory to be reflected in our running container in real time. We don't want to rebuild the container every time we change a line of code.

command: >: this is the default command that the service runs, for example when we start it with docker-compose up. We can override it by using docker-compose run ..., which is something we are going to do often.

- Then we run in our project directory:

docker-compose build

- Run flake8 through Docker Compose:

docker-compose run --rm app sh -c "flake8"

- Now we can create our Django project through Docker Compose:

docker-compose run --rm app sh -c "django-admin startproject app ." # Because we have already created an app directory, we need to add the "." at the end so it doesn't create an extra app directory

- Unit testing: we are going to use the Django test suite:

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238780#learning-tools

The Django test suite/framework is built on top of the unittest library. Django's test framework adds features such as:

- Test client: a dummy web browser that we can use to make requests to our project.

- Simulated authentication.

- Test database (for testing code that uses the DB): it automatically creates a temporary database and clears the data after each test, so that every test starts with a fresh database. We usually don't want to create test data inside the real database. It is possible to override this behavior so that you have consistent data for all of your tests; however, it's not really recommended unless there's a specific reason to do that.

- On top of Django we have the Django REST Framework, which also adds some features:

- API test client, which is like Django's test client but specifically for testing API requests: https://www.udemy.com/course/django-python-advanced/learn/lecture/32238810#learning-tools

- Test classes:

- SimpleTestCase: no DB integration.

- TestCase: DB integration.
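Django handles the fresh-database-per-test behavior for you (django.test.TestCase wraps each test in a transaction that is rolled back afterwards). The sketch below is only a standard-library analogy of the same idea: every test gets a brand-new in-memory SQLite database in setUp, so data created in one test never leaks into another. The recipe table is hypothetical.

```python
import sqlite3
import unittest


class RecipeTableTests(unittest.TestCase):
    """Stdlib analogy of a per-test database (not Django's actual mechanism)."""

    def setUp(self):
        # Runs before each test: create a fresh, empty database.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE recipe (title TEXT NOT NULL)")

    def tearDown(self):
        # Runs after each test: throw the database away.
        self.conn.close()

    def count_recipes(self):
        return self.conn.execute("SELECT COUNT(*) FROM recipe").fetchone()[0]

    def test_insert_one_recipe(self):
        self.conn.execute("INSERT INTO recipe (title) VALUES (?)", ("Soup",))
        self.assertEqual(self.count_recipes(), 1)

    def test_starts_empty(self):
        # Passes regardless of test order, because setUp built a new database.
        self.assertEqual(self.count_recipes(), 0)


if __name__ == "__main__":
    unittest.main(argv=["db_isolation_example"], exit=False)
```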

- Where do you put tests?

- When you create a new app in Django, it automatically adds a tests.py module. This is a placeholder where you can add tests.

- Alternatively, you can create a tests/ subdirectory inside your app, which allows you to split your tests into multiple modules.

- You can only use either the tests.py module or the tests/ directory, not both.

- If you create tests inside your tests/ directory, each module must be prefixed with test_.

- Test directories must contain an __init__.py file. This is what allows Django to pick up the tests.

- Mocking:

- https://www.udemy.com/course/django-python-advanced/learn/lecture/32238806#learning-tools

- Mocking is a technique used in software development to simulate the behavior of real objects or components in a controlled environment for the purpose of testing.

- In software testing, it is important to isolate the unit being tested and eliminate dependencies on external systems or services that may not be available or that may behave unpredictably. Mocking helps achieve this by creating a mock or a simulated version of the dependencies that are not available, allowing developers to test their code in isolation.

- We're going to implement mocking using the unittest.mock library.
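A minimal sketch of unittest.mock in action: mock.patch swaps time.sleep for a fake during each test, and a Mock with a side_effect list simulates an unreliable external dependency that fails twice before succeeding. The retry helper is hypothetical, written only for this example.

```python
import time
import unittest
from unittest import mock


def retry(operation, attempts=3, delay=1.0):
    """Hypothetical helper: call operation() until it stops raising ConnectionError."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the error
            time.sleep(delay)


class RetryTests(unittest.TestCase):
    @mock.patch("time.sleep")  # the real sleep is replaced, so the test runs instantly
    def test_retries_until_success(self, patched_sleep):
        # Simulated dependency: fails twice, then returns "ok".
        operation = mock.Mock(
            side_effect=[ConnectionError("down"), ConnectionError("down"), "ok"]
        )

        self.assertEqual(retry(operation, attempts=3, delay=1.0), "ok")
        self.assertEqual(operation.call_count, 3)
        # sleep was "called" between attempts but never actually waited.
        self.assertEqual(patched_sleep.call_count, 2)
        patched_sleep.assert_called_with(1.0)

    @mock.patch("time.sleep")
    def test_gives_up_after_last_attempt(self, patched_sleep):
        operation = mock.Mock(side_effect=ConnectionError("down"))
        with self.assertRaises(ConnectionError):
            retry(operation, attempts=2, delay=1.0)
        self.assertEqual(operation.call_count, 2)


if __name__ == "__main__":
    unittest.main(argv=["mocking_example"], exit=False)
```

Because the mocks record every call, the tests can check how the code interacted with its dependency (number of attempts, sleep arguments) without ever touching a real service.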

- We're going to set up tests for each Django app that we create.

- We will run the Tests through Docker Compose:

docker-compose run --rm app sh -c "python manage.py test"

- Now we can run our development server through Docker Compose:

docker-compose up

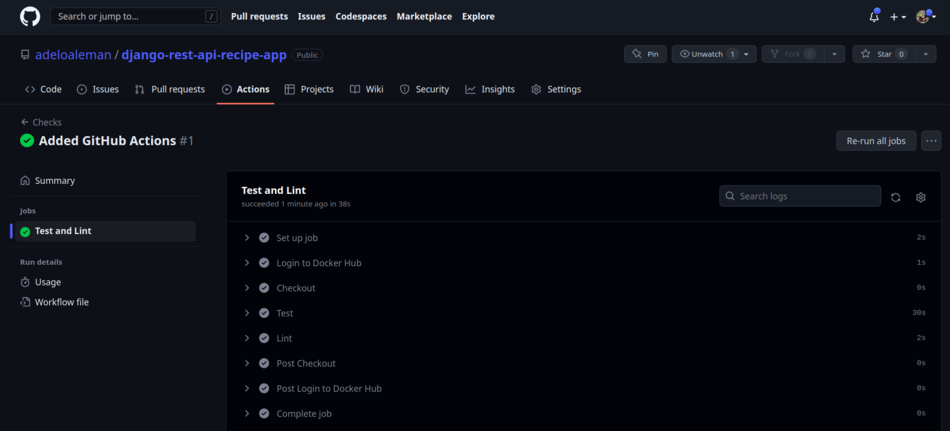

- Configuring GitHub Actions to run automatic tasks whenever we modify our project:

Create a config file at django-rest-api-recipe-app/.github/workflows/checks.yml (checks can be replaced with any name we want):

```yaml
---  # Marks the start of the YAML document
name: Checks

on: [push]  # This is the trigger

jobs:
  test-lint:
    name: Test and Lint
    runs-on: ubuntu-20.04
    steps:
      - name: Login to Docker Hub
        uses: docker/login-action@v1
        with:
          # Secrets configured above in our GitHub repository, using the user and token created in Docker Hub
          username: ${{ secrets.DOCKERHUB_USER }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      - name: Checkout
        # Checks out our repository's code into the file system of the GitHub Actions runner (here, the ubuntu-20.04 VM)
        uses: actions/checkout@v2
      - name: Test
        run: docker-compose run --rm app sh -c "python manage.py test"
      - name: Lint
        run: docker-compose run --rm app sh -c "flake8"
```

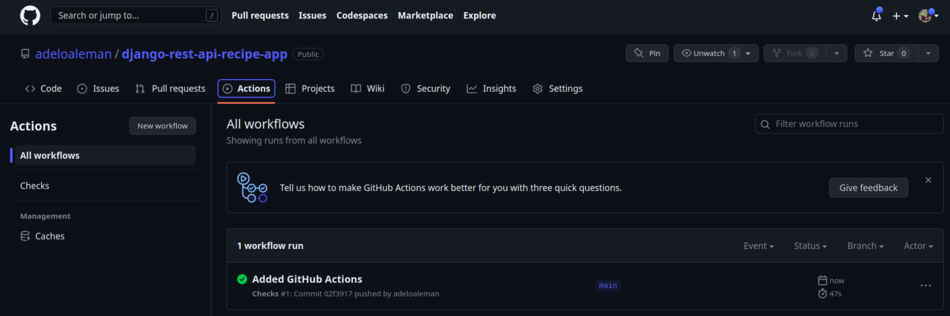

Test the GitHub Actions:

- We go to our GitHub repository: https://github.com/adeloaleman/django-rest-api-recipe-app > Actions:

- We'll see that there are no actions yet on the Actions page.

- On our local machine we commit and push our code:

```shell
git add .
git commit -am "Added GitHub Actions"
git push origin
```

- Then, if we come back to our GitHub repository > Actions, we'll see the execution of checks.yml.

- Test driven development with Django (TDD):

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238804#learning-tools

As we already mentioned, we are going to use the Django test framework...

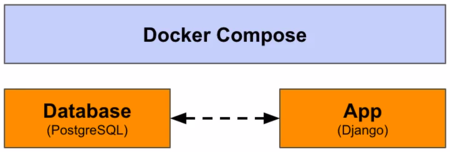

- Configuring the DB:

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238814#learning-tools

- PostgreSQL

- We're going to use Docker Compose to configure the DB:

- Defined with the project (reusable): Docker Compose allows us to define the DB configuration inside our project.

- Persistent data using volumes: in our local DB we can persist the data until we want to clear it.

- Maps a directory in the container to the local machine.

- It handles the network configuration.

- It allows us to configure our settings using environment variables.

- Adding the DB to our Docker Compose configuration:

- Then we make sure the DB configuration is correct by running our server:

- DB configuration in Django:

- Install the DB adaptor dependencies: tools Django uses to connect (Postgres adaptor).

- We're going to use the psycopg2 library/adaptor.

- Package dependencies for psycopg2:

- List of packages in the official psycopg2 documentation: C compiler / python3-dev / libpq-dev

- Equivalent packages for Alpine: postgresql-client / build-base / postgresql-dev / musl-dev

- Update the Dockerfile

- Update requirements.txt

- Then we can rebuild our container:

```shell
# Stops and removes the containers and networks created by docker-compose up
# (I'm not sure whether this always has to be done)
docker-compose down
docker-compose build
```

- Configure Django: tell Django how to connect: https://www.udemy.com/course/django-python-advanced/learn/lecture/32238826#learning-tools

- We're going to pull config values from environment variables.

- This allows us to configure DB variables in a single place, and it will work for both development and deployment.

settings.py
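A sketch of pulling the database settings from environment variables. It is wrapped in a function only so it can be tested; in settings.py you would normally assign the dict to DATABASES directly. The variable names DB_HOST, DB_NAME, DB_USER and DB_PASS are assumptions: they must match whatever environment variables your docker-compose.yml passes to the app service.

```python
import os


def database_settings(environ=os.environ):
    """Build a Django-style DATABASES dict from environment variables.

    DB_HOST, DB_NAME, DB_USER and DB_PASS are assumed names for illustration.
    Missing variables come back as None.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "HOST": environ.get("DB_HOST"),
            "NAME": environ.get("DB_NAME"),
            "USER": environ.get("DB_USER"),
            "PASSWORD": environ.get("DB_PASS"),
        }
    }


# In settings.py this would simply become: DATABASES = database_settings()
```

Keeping the values out of the code means the same settings.py works locally (values from docker-compose.yml) and on the deployment server (values from its own environment).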

- Fixing the DB race condition

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238828#learning-tools

depends_on makes sure the service starts, but doesn't ensure the application (in this case, PostgreSQL) is running. This can cause the Django app to try to connect to the DB before the DB has started, which would cause our app to crash.
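The general fix is to retry until the database is reachable. A minimal, framework-independent sketch using only the standard library: it polls a TCP port until something accepts connections or a timeout expires. The host name db and port 5432 in the usage comment are assumptions (a Compose service called db running PostgreSQL on its default port). An open port does not guarantee PostgreSQL is ready to accept queries, so a Django management command would more robustly attempt a real database connection.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Block until a TCP connection to host:port succeeds or `timeout` seconds pass.

    Returns True once the port accepts connections, False if time ran out.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (e.g. ConnectionRefusedError)
            # while nothing is listening on the port yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            print(f"{host}:{port} unavailable, waiting {interval}s...")
            time.sleep(interval)


# Example (assumed service name and port): wait_for_port("db", 5432)
```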

- The solution is to make Django wait for the DB. This is done with a custom Django management command that we're going to create: wait_for_db.

- Before we can create our wait_for_db command, we need to add a new app to our project. We're going to call it core:

docker-compose run --rm app sh -c "python manage.py startapp core"

- After the core app is added to our project, we add it to INSTALLED_APPS in settings.py

- We delete:

core/tests.py

core/views.py

- We add:

core/tests/

core/tests/__init__.py

core/management/

core/management/__init__.py

core/management/commands/

core/management/commands/wait_for_db.py

- Because of this directory structure, Django will automatically recognize wait_for_db.py as a management command that we'll be able to run using python manage.py wait_for_db
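If you prefer to script the file changes listed above, here is a small pathlib sketch. It uses tests.py and tests/, the names Django's startapp and its usual test layout use, and it also creates management/commands/__init__.py, which Django's documentation includes in its example layout for custom commands. The function name scaffold_core_app is hypothetical.

```python
from pathlib import Path


def scaffold_core_app(app_dir):
    """Hypothetical helper: apply the delete/add file changes to the app in app_dir."""
    app_dir = Path(app_dir)

    # We delete the default test module and views (missing_ok needs Python 3.8+).
    for name in ("tests.py", "views.py"):
        (app_dir / name).unlink(missing_ok=True)

    # We add the tests package and the management command package.
    new_files = [
        app_dir / "tests" / "__init__.py",
        app_dir / "management" / "__init__.py",
        app_dir / "management" / "commands" / "__init__.py",
        app_dir / "management" / "commands" / "wait_for_db.py",
    ]
    for path in new_files:
        path.parent.mkdir(parents=True, exist_ok=True)  # create missing directories
        path.touch(exist_ok=True)  # create an empty file if it doesn't exist
    return new_files
```

For example, calling scaffold_core_app("app/core") from the project root (assuming startapp created core inside the app directory) leaves an empty wait_for_db.py ready for the command's code.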